Novel View Synthesis of Structural Color Objects Created by Laser Markings

Abstract

Transforming a physical object into a high-quality 3D digital twin using novel view synthesis is crucial for researchers working on automatic laser marking of arbitrary color images on different metal substrates. Current radiance field methods have significantly advanced novel view synthesis of scenes captured with multiple photos or videos, but they struggle to represent scenes with shiny objects. Multi-view reconstruction of reflective objects with structural colors is especially challenging because specular reflections are view-dependent and thus violate multi-view consistency, which is the cornerstone of most multi-view reconstruction methods. In addition, there is a general lack of synthetic datasets for objects with structural colors and of literature reviews on state-of-the-art (SOTA) novel view synthesis methods for this kind of material. To address these issues, we introduce a novel synthetic dataset and use it to conduct a quantitative and qualitative analysis of SOTA novel view synthesis methods. We demonstrate different techniques to improve the scene representation of laser-printed planar structural color objects, focusing on the 3D Gaussian Splatting (3D-GS) method, which performs exceptionally well on our synthetic dataset. Our techniques, such as using the geometric prior of planar structural color objects when initializing the scene with a sparse structure-from-motion (SfM) point cloud and the Anisotropy Regularizer, significantly improve the visual quality of view synthesis. We design different capture setups to acquire images of objects and evaluate the visual quality of the scene under each setup. Additionally, we present comprehensive experiments that demonstrate methods to simulate structural color objects using only captured images of laser-printed primaries. This research aims to contribute to the advancement of novel view synthesis methods for scenes involving reflective objects with structural colors.

TLDR

This work is the result of my master's thesis on “Novel View Synthesis of Structural Color Objects Created by Laser Markings”, in collaboration with AIDAM, Oraclase, MPI-Inf, and SIC.

This work marks the beginning of Novel View Synthesis (NVS) of structural color objects and opens up potential avenues for various future research directions and endeavors.

Beginners: This thesis will help beginners grasp the brief history of radiance field methods and the essential preliminaries, such as the mathematics, tools, and concepts needed to understand NVS methods. It will also serve as a foundational guide for novice researchers interested in novel view synthesis of specular and shiny objects with highly view-dependent colors, commonly referred to as Structural Colors or Pearlescent Colors.

Advanced Researchers: This thesis will also be a valuable reference for advanced researchers who wish to revisit the fundamental concepts and access the StructColorToaster scene from the new Structural Color Blender Dataset. Additionally, the thesis discusses approaches to improve results and outlines potential future research directions.

Technical Artists: This thesis provides an overview of how the new Gaussian Splatting technology can be applied to various use cases and demonstrates the life cycle of a specific use case in interactive product visualization, from capturing the product to visualizing it in a web viewer.

Datasets

Structural Color Blender Dataset (Synthetic Scenes)

- StructColorToaster Scene: Access Blender source and dataset from 👉here

Real Scenes

- StructColorPainting Scene

- StructColorPaintingOld Scene

- StructColorPrimaries Scene

- StructColorTaylorSwift Scene

Evaluation

Structural Color Blender Dataset (Synthetic Scenes)

- Evaluation of selected SOTA methods on StructColorToaster scene:

| Method | Iterations | PSNR ↑ | SSIM ↑ | LPIPS ↓ | Train time | FPS | Memory |
|---|---|---|---|---|---|---|---|
| NeRF | 50K | 23.359 | 0.8427 | 0.1895 | 1 day | 0.001 | 22 MB |
| InstantNGP | 50K | 19.659 | 0.8109 | 0.2068 | 23 min | 6 | 159.2 MB |
| Mip-NeRF | 250K | 22.818 | 0.877 | 0.1263 | 1 day | 0.03 | 15.9 MB |
| NeRFacto | 50K | 19.305 | 0.8056 | 0.2123 | 35 min | 0.6 | 168 MB |
| Ref-NeRF | 250K | 27.5194 | 0.9142 | 0.1286 | 2 days | 0.3 | 8.2 MB |
| 3D-GS | 30K | 24.0435 | 0.9065 | 0.1061 | 20 min | 140 | 77 MB |
| 3D-GS | 60K | 24.3117 | 0.9070 | 0.1052 | 30 min | 140 | 77 MB |

(The values for the Train time and FPS are approximate.)
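For readers less familiar with the PSNR column, the following is a minimal NumPy sketch of how the metric is commonly computed. It assumes images are floating-point arrays scaled to [0, 1]; the function name `psnr` and the toy images are illustrative and not taken from the thesis code. SSIM and LPIPS require dedicated implementations and are not shown.

```python
import numpy as np


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    rendered = np.asarray(rendered, dtype=np.float64)
    if reference.shape != rendered.shape:
        raise ValueError("images must have the same shape")
    # Mean squared error over all pixels and channels.
    mse = np.mean((reference - rendered) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


# Toy example: a 4 x 4 RGB image and a copy with a constant error of 0.1.
gt = np.full((4, 4, 3), 0.5)
pred = gt + 0.1
print(f"PSNR: {psnr(gt, pred):.2f} dB")
```

Because PSNR is logarithmic in the mean squared error, a gain of a few dB corresponds to a large reduction in per-pixel error.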

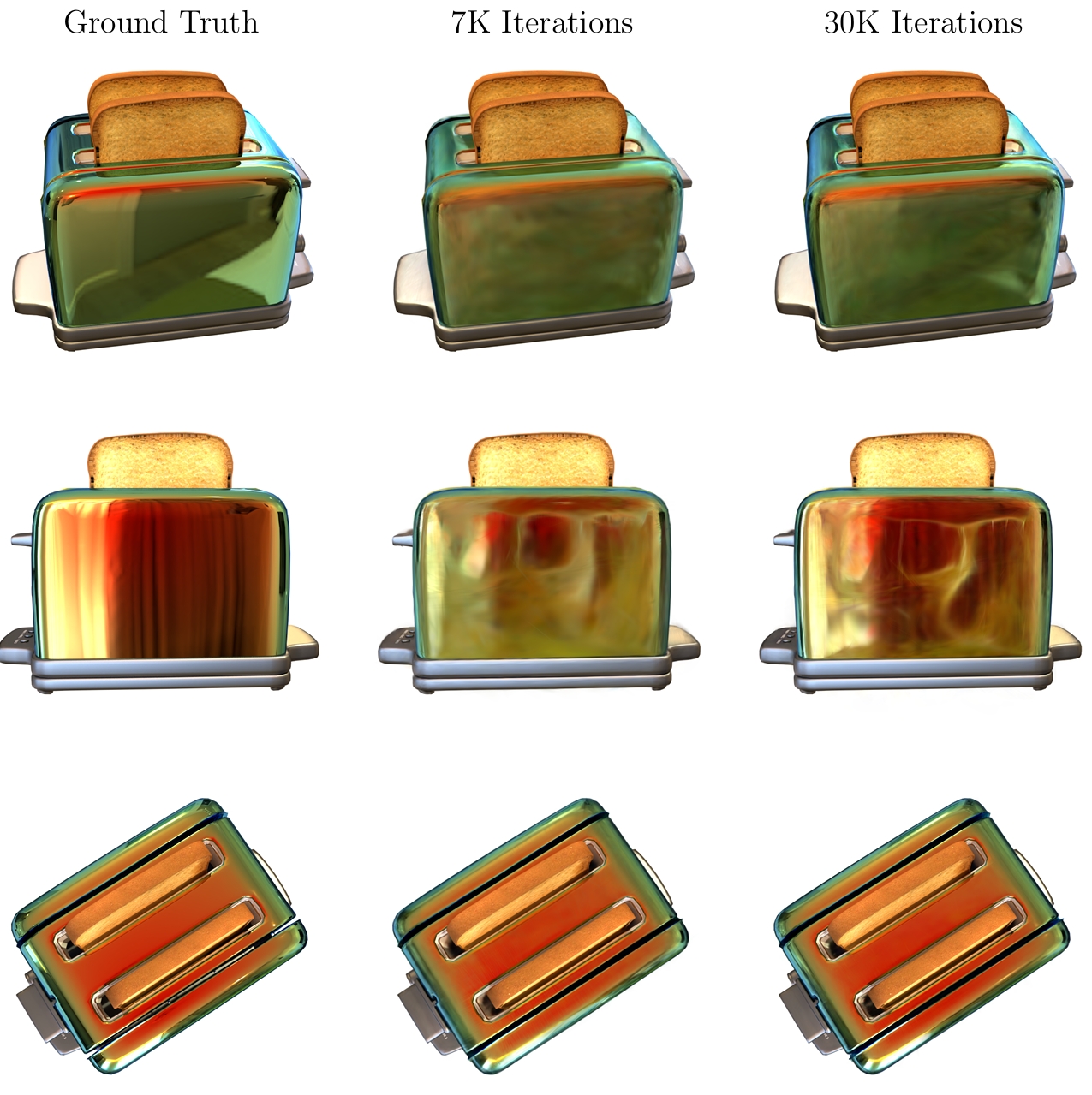

- Renders of optimized StructColorToaster Scene using 3D-GS:

Figure 1: The renderings show that 3D-GS is able to capture the crisp, shiny appearance of the surface. Although it learns specular reflections better with more iterations, the result is still not up to the mark and suffers from holes and aliasing effects, aka popping artifacts.

Real Scenes

- Evaluation of different choices on the StructColorPainting and StructColorPaintingOld scenes

| Method | 7K iterations | 30K iterations |
|---|---|---|
| Vanilla 3D-GS | 27.11 | 28.92 |
| Cleaned-SfM | 27.6715 | 29.1789 |
| With-Masks | 9.352 | 9.4603 |
| With-Cropped-Images/RGBA Images | 29.1759 | 30.0007 |
| With-Cropped-Images/RGBA Images: (No-Densification + No-Position Optimization) | 24.4612 | 25.089 |
| Densify Until Iteration = 30000 | 26.8325 | 29.4149 |
| Densification Interval = 30 | 25.2634 | 28.6454 |
| Densification Interval = 50 | 26.0189 | 28.2718 |
| Reset Opacity = Every 1000 Iterations | 27.7216 | 29.2581 |
| Reset Opacity = Every 2000 Iterations | 27.6354 | 29.1786 |
| Reset Opacity = Every 5000 Iterations | 27.82 | 29.2726 |
| SH-Degree 0 | 26.4699 | 27.9771 |
| White-Background | 27.6579 | 29.2025 |
| Random-Background | 27.7607 | 29.3303 |
| No-Densification | 17.7919 | 18.3929 |
| Reduced Number of Images = 297 | 27.3657 | 28.7223 |
| Anisotropy-Regularizer | 27.5747 | 29.4191 |
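This page does not spell out the exact form of the Anisotropy Regularizer. As a hedged illustration, the sketch below assumes a common hinge-style formulation that penalizes Gaussians whose largest-to-smallest scale ratio exceeds a threshold. The function name `anisotropy_loss` and the threshold `max_ratio = 10.0` are hypothetical choices for this example, not values from the thesis.

```python
import numpy as np


def anisotropy_loss(scales, max_ratio=10.0):
    """Mean hinge penalty on each Gaussian's largest-to-smallest axis ratio.

    scales: (N, 3) array of positive per-axis scales, one row per Gaussian.
    max_ratio: hypothetical threshold below which no penalty is applied.
    """
    scales = np.asarray(scales, dtype=np.float64)
    ratio = scales.max(axis=1) / scales.min(axis=1)
    # Only the part of the ratio above max_ratio contributes to the loss.
    return float(np.mean(np.maximum(ratio, max_ratio) - max_ratio))


scales = np.array([
    [1.0, 1.0, 1.0],   # isotropic, ratio 1: no penalty
    [5.0, 1.0, 0.5],   # ratio 10, exactly at the threshold: no penalty
    [20.0, 1.0, 1.0],  # needle-like, ratio 20: penalty of 10
])
print(anisotropy_loss(scales))
```

In a training loop, a term like this would be added to the photometric loss with a small weight, discouraging the thin, spiky Gaussians that tend to show up as artifacts from novel viewpoints.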

- Evaluation of selected techniques’ results computed over our StructColorPainting scene:

| Method | Iterations | PSNR ↑ | SSIM ↑ | LPIPS ↓ | Train time | FPS | Memory |
|---|---|---|---|---|---|---|---|
| Cleaned-SfM | 7K | 27.6452 | 0.9221 | 0.1795 | 5.122 min | 150 | 79 MB |
| Cleaned-SfM | 30K | 29.1789 | 0.9342 | 0.1602 | 27.69 min | 120 | 150 MB |
| Anisotropy-Regularizer | 7K | 27.5476 | 0.9223 | 0.1780 | 5.134 min | 150 | 78.6 MB |
| Anisotropy-Regularizer | 30K | 29.9631 | 0.9346 | 0.1572 | 26.35 min | 120 | 152.4 MB |
| Anisotropy-Regularizer: 297-Images | 7K | 26.9920 | 0.9238 | 0.1833 | 5.812 min | 150 | 86.1 MB |
| Anisotropy-Regularizer: 297-Images | 30K | 29.3213 | 0.9339 | 0.1648 | 28.57 min | 120 | 176.2 MB |
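The Cleaned-SfM rows refer to initializing 3D-GS from a sparse SfM point cloud that has been cleaned using the planar geometry of the object. The page does not describe the cleaning procedure, so the following is only a sketch under one assumption: points belonging to a planar object should lie close to a single plane, and points far from a least-squares plane can be discarded. The helper names `fit_plane` and `keep_points_near_plane` and the distance threshold are hypothetical.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane through `points`: returns (centroid, unit normal)."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction of least variance, i.e. the plane normal.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    return centroid, vt[-1]


def keep_points_near_plane(points, max_distance):
    """Drop points farther than `max_distance` from the fitted plane."""
    points = np.asarray(points, dtype=np.float64)
    centroid, normal = fit_plane(points)
    distance = np.abs((points - centroid) @ normal)
    return points[distance <= max_distance]


# Toy cloud: a 15 x 15 grid on the plane z = 0 plus five floating outliers.
grid = np.linspace(-1.0, 1.0, 15)
xx, yy = np.meshgrid(grid, grid)
on_plane = np.stack([xx.ravel(), yy.ravel(), np.zeros(xx.size)], axis=1)
floaters = np.array([
    [0.0, 0.0, 0.5], [0.5, 0.5, 0.8], [-0.5, 0.5, 0.6],
    [0.5, -0.5, 0.7], [-0.5, -0.5, 0.9],
])
cloud = np.vstack([on_plane, floaters])
cleaned = keep_points_near_plane(cloud, max_distance=0.1)
print(cloud.shape[0], "points before,", cleaned.shape[0], "after")
```

A plain least-squares fit is sensitive to outliers, so for a noisy cloud a robust estimator such as RANSAC, or cropping the cloud to the object region first, would likely be a better choice.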

- Evaluation of renderings produced with our techniques and with gsplat (using the cleaned SfM point cloud and additional features), computed over the StructColorTaylorSwift scene:

| Method | Iterations | PSNR ↑ | SSIM ↑ | LPIPS ↓ | Train time | FPS | Memory |
|---|---|---|---|---|---|---|---|
| Our Techniques | 7K | 30.2567 | 0.9338 | 0.1921 | 4.123 min | 150 | 55 MB |
| Our Techniques | 30K | 33.6547 | 0.9458 | 0.1749 | 18.5 min | 120 | 94 MB |
| gsplat | 7K | 31.8051 | 0.9391 | 0.1880 | 2.86 min | 150 | 64 MB |
| gsplat | 30K | 34.6451 | 0.9511 | 0.1658 | 16.61 min | 120 | 119 MB |
| gsplat + Mip-Splatting | 7K | 31.3528 | 0.9362 | 0.1922 | 2.54 min | 150 | 66 MB |
| gsplat + Mip-Splatting | 30K | 34.6654 | 0.9379 | 0.1827 | 17.1 min | 120 | 124 MB |

Videos

Real Scenes

RGB renders of interactive visualization in the SIBR viewer of real-world scenes optimized with our techniques:

StructColorPainting scene

StructColorTaylorSwift scene

Synthesized Scenes Using Just Primaries

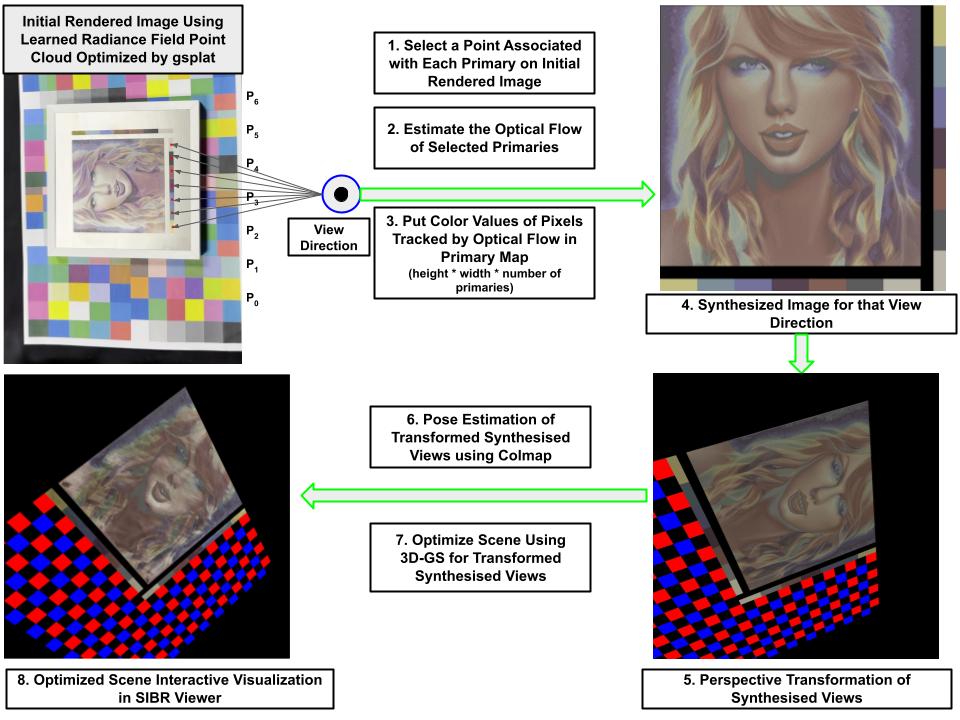

- Simulating Structural Color Object (Pseudo) before Laser Printing:

Figure 2: Pipeline of Synthesizing Arbitrary Images of Structural Color Object for Arbitrary Viewing Directions.

- Comparison between real structural color object views and respective synthesized views using just primaries for respective view directions:

StructColorTaylorSwift scene

RGB renders of interactive visualization in SIBR viewer of an optimized synthetic scene, generated using synthesized images created just with primaries:

- Synthesizing views using only the earlier tracked primaries for respective view directions, before laser printing the structural color object:

Synthesized views using just primaries for respective view directions:

RGB renders of interactive visualization in SIBR viewer of an optimized synthetic scene, generated using synthesized images created just with primaries:

3D Web Viewer

StructColorPaintingViewer is a web viewer for visualizing structural color objects. The web viewer is implemented with the help of gsplat.js, but it only supports scenes optimized with spherical harmonics (SH) of degree 0. Scenes optimized with SH degree 0 (SH0) do not reach the quality of those optimized with SH degree 3 (SH3), as is evident from the videos above, which show SIBR viewer renderings of scenes optimized with SH3.
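To illustrate why SH0 scenes lose quality on structural color objects, the sketch below evaluates a Gaussian's color from its SH coefficients for two viewing directions. It assumes the real SH basis constants and sign convention commonly used in 3D-GS implementations and stops at degree 1 for brevity (SH3 adds further bands in the same way). The function name `sh_to_rgb` and the coefficient values are made up for the example.

```python
import numpy as np

C0 = 0.28209479177387814  # degree-0 real SH constant, 1 / (2 * sqrt(pi))
C1 = 0.4886025119029199   # degree-1 real SH constant, sqrt(3 / (4 * pi))


def sh_to_rgb(sh, view_dir, degree):
    """Evaluate RGB color from SH coefficients for one viewing direction.

    sh: (4, 3) array, row 0 is the DC term, rows 1-3 are the degree-1 terms.
    view_dir: 3-vector pointing along the viewing direction.
    degree: 0 uses only the DC term, 1 adds the view-dependent band.
    """
    sh = np.asarray(sh, dtype=np.float64)
    direction = np.asarray(view_dir, dtype=np.float64)
    x, y, z = direction / np.linalg.norm(direction)
    color = C0 * sh[0]
    if degree >= 1:
        color = color - C1 * y * sh[1] + C1 * z * sh[2] - C1 * x * sh[3]
    # Shift to the [0, 1] color range and clamp negative values.
    return np.clip(color + 0.5, 0.0, None)


# Made-up coefficients: a base color plus a red highlight along +z.
sh = np.array([
    [1.0, 0.5, 0.0],
    [0.0, 0.0, 0.0],
    [0.8, 0.0, 0.0],
    [0.0, 0.0, 0.0],
])
front, side = [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]
print("SH0:", sh_to_rgb(sh, front, 0), sh_to_rgb(sh, side, 0))
print("SH1:", sh_to_rgb(sh, front, 1), sh_to_rgb(sh, side, 1))
```

With degree 0 the color is identical from every direction, so any view-dependent shift has to be baked into a single average color. Higher degrees let each Gaussian change color with the viewing direction, which is the effect structural colors depend on.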

BibTeX

References

1. [NeRF] Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. NeRF: Representing scenes as neural radiance fields for view synthesis. ECCV, 2020.

2. [InstantNGP] Thomas Müller, Alex Evans, Christoph Schied, and Alexander Keller. Instant neural graphics primitives with a multiresolution hash encoding. ACM Transactions on Graphics, July 2022.

3. [Mip-NeRF] Jonathan T. Barron, Ben Mildenhall, Matthew Tancik, Peter Hedman, Ricardo Martin-Brualla, and Pratul P. Srinivasan. Mip-NeRF: A multiscale representation for anti-aliasing neural radiance fields. ICCV, 2021.

4. [NeRFacto] Matthew Tancik, Ethan Weber, Evonne Ng, Ruilong Li, Brent Yi, Justin Kerr, Terrance Wang, Alexander Kristoffersen, Jake Austin, Kamyar Salahi, Abhik Ahuja, David McAllister, and Angjoo Kanazawa. Nerfstudio: A modular framework for neural radiance field development. ACM SIGGRAPH 2023.

5. [Ref-NeRF] Dor Verbin, Peter Hedman, Ben Mildenhall, Todd Zickler, Jonathan T. Barron, and Pratul P. Srinivasan. Ref-NeRF: Structured view-dependent appearance for neural radiance fields. CVPR, 2022.

6. [3D-GS] Bernhard Kerbl, Georgios Kopanas, Thomas Leimkühler, and George Drettakis. 3D Gaussian splatting for real-time radiance field rendering. ACM Transactions on Graphics, July 2023.